Arushi Sharma Frank advises NVIDIA Inception startup Emerald AI, which develops software to help data centers become grid assets. She also advises technology firms working with utilities to integrate grid-edge solutions.

The same planning instincts, operational conversations and system design principles that helped distributed energy earn its place on the grid must now be applied to the infrastructure demands of artificial intelligence. We’ve already lived through the work of proving that resources outside the substation fence can be real contributors to grid reliability — building controls, thermostats, batteries, rooftop solar, virtual power plant portfolios. What mattered wasn’t the technology alone, but its ability to deliver visibility, control and verifiable response when it counted.

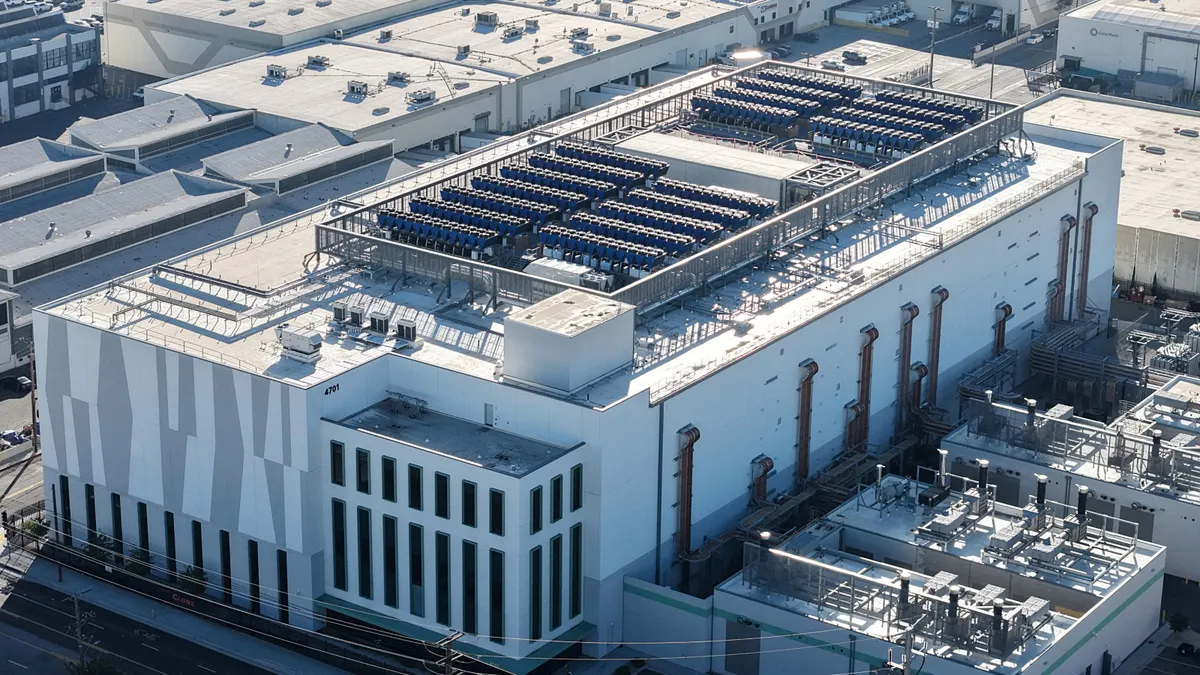

We are entering a similar moment with AI data centers. Their load profiles are shaping up to be large and urgent, but they are also increasingly aware of grid dispatch and, thanks to the software and hardware providers behind the fence, of power efficiency. The architecture is different. The topology is different. But the “ask” is familiar: allow us to participate in managing grid stress, not just consume power, and capital will flow to the solutions that enable flexible, grid-supportive outcomes. With the right planning framework, flexible assets can be integrated into the grid’s living system.

That vision requires us to extend the logic we’ve spent a decade refining (how we plan for flexibility, model load shapes, co-invest in infrastructure and compensate response) across all sides of the grid, not just at the edge. We spent years proving that distributed energy can perform; let us spend only months doing the same for large loads. The opportunity is to unlock a different grid future, one in which AI systems and human communities are powered by the same infrastructure rather than by competing versions of it. In that future, capital flows toward shared reliability outcomes, not toward expensive off-grid isolation. Our grid policies are an invitation for capital investment in the grid edge and in data center load response, and both investments yield value-based returns in the form of virtual power plants and grid-responsive AI load.

We can already see where the grid is strained, which loads contribute to system risk and which can be responsive, dispatchable and resilient. We can already see capital flowing toward both backup and interactive infrastructure. We can choose to unlock that participation, or we can let the workarounds proliferate.

Reimagine interconnection and hosting capacity methods for flexible loads

It is no longer sufficient to run load interconnection studies on the assumption of a fixed, unchanging, 24/7 draw. That’s not how today’s demand behaves, nor is it behavior we should encourage. And it’s certainly not how AI data centers will behave when the right controls are enabled. Workload performance, for both compute and non-compute loads, can be aligned with site-level flexibility. We’ve seen AI customers demonstrate load modulation without disrupting compute operations. But utility interconnection pathways must accept these profiles in the first place. We should be clear-eyed about what this requires: updated screening tools, revised reliability models and planning processes that accept flexibility declarations with enforceable parameters. If a site operator can curtail or shift 40 MW with advance notice and software-based controls, or place a battery next to the load to do the shaping work so the load itself does not need to curtail, the grid should not plan as if that site is inflexible. And if we design connection queues and hosting maps around worst-case peaks only, we will get worst-case costs and delays.
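To make the contrast concrete, here is a minimal Python sketch of two screening approaches, under loudly stated assumptions: hourly headroom at the point of interconnection is already known, and a flexibility declaration is reduced to three hypothetical terms (`peak_mw`, `curtailable_mw`, `max_event_hours`). None of these names come from any utility’s actual screening tool, and the 150 MW site and 110 MW evening headroom are invented for illustration; only the 40 MW of flexibility echoes the example above.

```python
from dataclasses import dataclass


@dataclass
class FlexibilityDeclaration:
    """Hypothetical enforceable terms a large-load applicant might file."""
    peak_mw: float          # maximum requested draw
    curtailable_mw: float   # MW the operator commits to shed, shift or cover with storage
    max_event_hours: int    # longest continuous curtailment the operator will accept


def worst_case_screen(decl: FlexibilityDeclaration, headroom_mw: list[float]) -> bool:
    """Traditional screen: assume the full peak is drawn in every hour."""
    return all(decl.peak_mw <= h for h in headroom_mw)


def flexible_screen(decl: FlexibilityDeclaration, headroom_mw: list[float]) -> bool:
    """Pass if every hourly shortfall fits within the declared curtailment
    and no run of curtailed hours exceeds the declared event limit."""
    run = 0
    for h in headroom_mw:
        shortfall = decl.peak_mw - h
        if shortfall <= 0:
            run = 0           # no curtailment needed this hour; reset the event counter
            continue
        if shortfall > decl.curtailable_mw:
            return False      # even full curtailment cannot fit under the headroom
        run += 1
        if run > decl.max_event_hours:
            return False      # stress lasts longer than the operator committed to
    return True


headroom = [150.0] * 17 + [110.0] * 4 + [150.0] * 3   # hypothetical evening constraint
site = FlexibilityDeclaration(peak_mw=150.0, curtailable_mw=40.0, max_event_hours=4)
print(worst_case_screen(site, headroom))
print(flexible_screen(site, headroom))
```

In this sketch the same site fails the worst-case screen but passes once its declared 40 MW is credited, provided the constrained window is no longer than the committed event length. A real study would also check ramp rates, notice periods and contingency conditions, which this sketch ignores.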

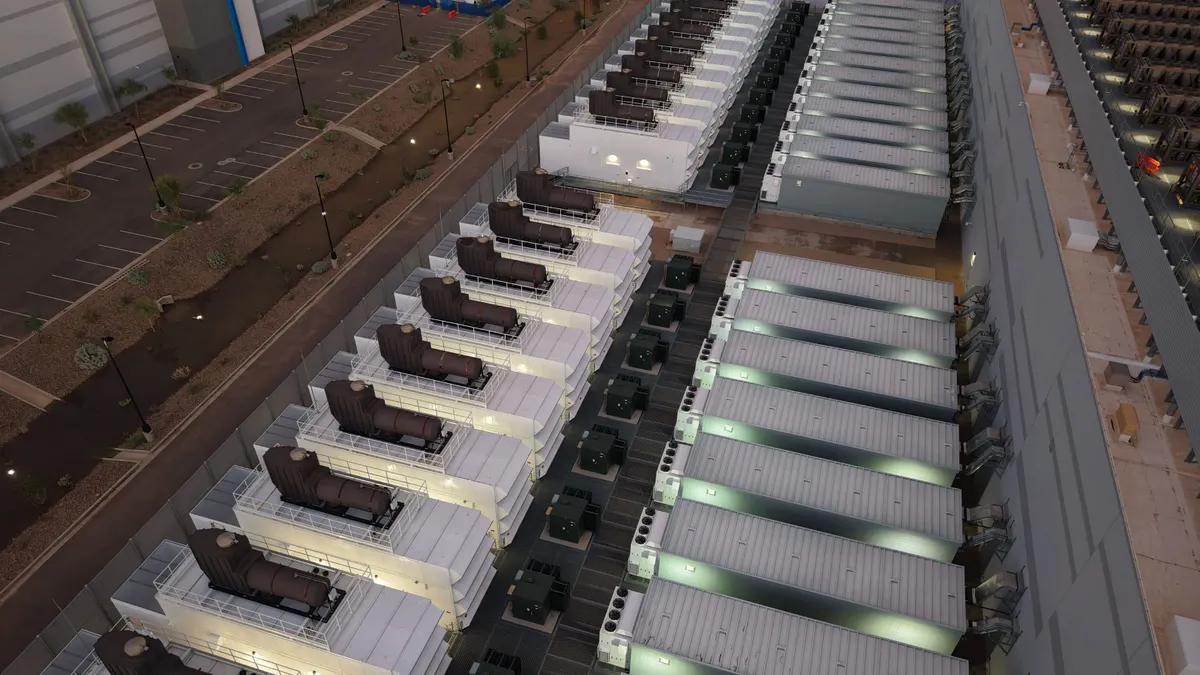

Create co-location pathways with grid-side or long-duration storage

The instinct to self-provide is not going away. In every jurisdiction where interconnection is slow or capacity is constrained, the market is responding the only way it knows how: by building behind-the-fence solutions such as batteries, diesel gensets and gas peakers. The question is whether those assets will help the grid or simply bypass it. We should design for the former. Utilities should develop technical interconnection standards, standard offer tariffs and integrated planning models that support co-location of large-scale storage with load. These storage assets can provide grid services, shave peaks and help avoid curtailment, but only if the grid is willing to treat co-located storage (and natural gas generators) as more than a private insurance policy.
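One way to picture co-located storage shaving peaks so the load avoids curtailment is a simple greedy dispatch, sketched here in Python under stated assumptions: hourly steps, a lossless battery, a fixed grid import cap and a load that is curtailed only when storage runs out. The function name `shave_peaks` and every number in the example are hypothetical; real co-location agreements would add efficiency losses, state-of-charge reserves and grid-service commitments.

```python
def shave_peaks(load_mw, import_cap_mw, batt_mw, batt_mwh, start_soc_mwh=None):
    """Greedy hourly dispatch for storage co-located with a large load.

    Discharges whenever load exceeds the grid import cap, curtails any
    remainder the battery cannot cover, and recharges with spare headroom.
    Assumes 1-hour intervals (so MW and MWh are numerically equal per step)
    and a lossless battery. Returns (grid_import_mw, curtailed_mw).
    """
    soc = batt_mwh if start_soc_mwh is None else start_soc_mwh
    grid_import, curtailed = [], []
    for load in load_mw:
        if load > import_cap_mw:
            # Cover the excess with storage, limited by power rating and stored energy.
            discharge = min(load - import_cap_mw, batt_mw, soc)
            soc -= discharge
            curtailed.append(load - discharge - import_cap_mw)
            grid_import.append(import_cap_mw)
        else:
            # Recharge using headroom under the cap, limited by power rating and free capacity.
            charge = min(import_cap_mw - load, batt_mw, batt_mwh - soc)
            soc += charge
            curtailed.append(0.0)
            grid_import.append(load + charge)
    return grid_import, curtailed


load = [80.0, 80.0, 100.0, 100.0, 100.0, 80.0]   # hypothetical hourly site load, MW
grid, cut = shave_peaks(load, import_cap_mw=90.0, batt_mw=10.0, batt_mwh=20.0)
print(grid)  # [80.0, 80.0, 90.0, 90.0, 90.0, 90.0]
print(cut)   # [0.0, 0.0, 0.0, 0.0, 10.0, 0.0]
```

With two hours of storage, the site holds its grid import at the cap through the first two peak hours and curtails only in the third. That is why storage duration, not just power rating, determines how much curtailment co-location can avoid.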

Set standards for fault ride-through and grid services participation from non-traditional facilities

There are thousands of buildings, campuses and compute clusters that were never designed to serve the grid but now can. These facilities already have the capabilities: backup generators, battery banks, power control systems, software-defined operations. What they lack is a clear path to participate. We can fix that. Interconnection rules should allow for fault ride-through behavior from these systems. Ancillary service markets should invite aggregated load with dispatchable response. Invert the model: rather than designating who is allowed to help, define the performance that helps, and open the door to whoever can meet it. The “who” can be aggregated distributed resources or compute campuses. Invite the participation, and the right resources will come.
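The “invert the model” idea can be sketched as a qualification test that looks only at performance, never at technology type. In this minimal Python sketch, the `Resource` and `PerformanceStandard` types, their three metrics and the thresholds are all hypothetical, chosen for illustration rather than drawn from any market rule; fault ride-through and telemetry requirements are left out. The aggregation rule (capacity adds, slowest response and shortest duration govern) is one conservative assumption among several possible.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    """Any resource offering grid response, described only by performance."""
    name: str
    response_mw: float       # MW of verified load reduction or injection
    response_minutes: float  # time to reach full response after dispatch
    duration_hours: float    # how long the response can be sustained


@dataclass
class PerformanceStandard:
    """Hypothetical service requirements, stated without naming technologies."""
    min_mw: float
    max_response_minutes: float
    min_duration_hours: float


def aggregate(name, members):
    """Combine small resources: capacity adds up, while the slowest response
    and the shortest duration limit the group."""
    return Resource(
        name=name,
        response_mw=sum(m.response_mw for m in members),
        response_minutes=max(m.response_minutes for m in members),
        duration_hours=min(m.duration_hours for m in members),
    )


def qualifies(resource, std):
    """The same test applies to every resource, whatever sits behind it."""
    return (resource.response_mw >= std.min_mw
            and resource.response_minutes <= std.max_response_minutes
            and resource.duration_hours >= std.min_duration_hours)


std = PerformanceStandard(min_mw=1.0, max_response_minutes=10.0, min_duration_hours=2.0)
homes = aggregate("1,000 home batteries",
                  [Resource(f"home-{i}", 0.005, 1.0, 2.0) for i in range(1000)])
campus = Resource("compute campus", 40.0, 5.0, 4.0)
building = Resource("office building", 2.0, 30.0, 3.0)
for r in (homes, campus, building):
    print(r.name, qualifies(r, std))
```

Here the aggregated home batteries and the compute campus both qualify, while the building is excluded only because it responds too slowly, not because of what it is.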

Align cost-sharing and offtake structures with actual system benefit and risk

Right now, most interconnecting load is asked to bear the full cost of infrastructure upgrades, even when those upgrades solve broader grid problems or serve multiple customers. This is an inefficient and oddly punitive outcome that discourages precisely the customers who are willing to invest in flexible, grid-serving design. Instead, utilities and commissions should adopt frameworks that allocate upgrade costs based on dispatch characteristics, controllability and the contribution of demand flexibility to system reliability. If a customer lowers system peak by 30%, that should matter. If they bring long-duration storage to self-provide at peak, or are willing to accept curtailment, that should matter. The same applies to power purchase risk. When AI customers face long-term, inflexible energy procurement contracts that ignore workload variability, we risk them simply walking away, which makes power deals uneconomic at scale. Let’s modernize those terms by offering indexed pricing structures that reward grid-supportive behavior. Recognize the load shapes of the future, not just the shapes of the past.
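As a rough illustration of why committed flexibility could change the bill, the Python sketch below compares two hypothetical bases for splitting a shared upgrade: requested nameplate capacity versus firm demand at system peak after committed curtailment. The $170 million cost, the two customers and their megawatt figures are invented; the second customer’s 60 MW of curtailment on a 200 MW request is one reading of a 30% peak reduction. Actual cost-allocation methods are set by commissions and are far more detailed.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    name: str
    nameplate_mw: float    # capacity requested in the interconnection application
    curtailable_mw: float  # MW the customer commits to remove during system peaks

    @property
    def firm_peak_mw(self) -> float:
        """Demand the system must still serve at peak after committed flexibility."""
        return self.nameplate_mw - self.curtailable_mw


def allocate_upgrade_cost(cost, customers, basis="firm"):
    """Split an upgrade cost in proportion to each customer's chosen demand basis."""
    fields = {"firm": "firm_peak_mw", "nameplate": "nameplate_mw"}
    if basis not in fields:
        raise ValueError(f"unknown basis: {basis}")
    weights = {c.name: getattr(c, fields[basis]) for c in customers}
    total = sum(weights.values())
    return {name: cost * w / total for name, w in weights.items()}


customers = [
    Customer("inflexible site", nameplate_mw=200.0, curtailable_mw=0.0),
    Customer("flexible site", nameplate_mw=200.0, curtailable_mw=60.0),  # 30% of its peak
]
print(allocate_upgrade_cost(170.0, customers, basis="nameplate"))
# {'inflexible site': 85.0, 'flexible site': 85.0}
print(allocate_upgrade_cost(170.0, customers, basis="firm"))
# {'inflexible site': 100.0, 'flexible site': 70.0}
```

Under a nameplate basis both sites pay the same; on a firm-peak basis the flexible site’s commitment lowers its share from $85 million to $70 million in this toy case.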

Conduct new system stability studies that incorporate compute and non-compute dispatch profiles

Today’s studies are still anchored in outdated assumptions. They model data center load as a flat wall of constant demand. That’s not what these sites do. Compute ramps up and down. Cooling is weather-sensitive. Workloads can queue, pause or reschedule. Training differs from inference, and load behavior varies even within each of those categories. AI workloads also differ from traditional cloud workloads. The system studies need to catch up. At a minimum, planning authorities should require developers to submit load shape scenarios based on anticipated operations. These scenarios can and should be validated against real telemetry once sites are live. We already do this for generation. It’s time to do it for load.
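A minimal sketch of that validation step, in Python, might compare a developer’s declared hourly load shape with metered telemetry. The two metrics (peak error and energy-normalized mean absolute error), the 5% and 10% tolerances and all load values are assumptions made for illustration; no planning authority’s criteria are implied. The example also scores a flat “wall of constant demand” against the same telemetry.

```python
def validate_load_shape(declared_mw, metered_mw, peak_tol=0.05, nmae_tol=0.10):
    """Compare a declared load-shape scenario with metered telemetry.

    peak_error: relative gap between metered and declared peaks.
    nmae: total absolute interval error divided by declared energy.
    """
    if len(declared_mw) != len(metered_mw):
        raise ValueError("declared and metered series must cover the same intervals")
    declared_peak = max(declared_mw)
    peak_error = abs(max(metered_mw) - declared_peak) / declared_peak
    abs_error = sum(abs(m - d) for d, m in zip(declared_mw, metered_mw))
    nmae = abs_error / sum(declared_mw)
    return {
        "peak_error": peak_error,
        "nmae": nmae,
        "within_tolerance": peak_error <= peak_tol and nmae <= nmae_tol,
    }


metered = [72.0, 86.0, 99.0, 101.0, 84.0, 71.0]     # hypothetical telemetry, MW
scenario = [70.0, 85.0, 100.0, 100.0, 85.0, 70.0]   # declared operating shape
flat_wall = [100.0] * 6                             # constant-demand assumption
print(validate_load_shape(scenario, metered)["within_tolerance"])
print(validate_load_shape(flat_wall, metered)["within_tolerance"])
```

In this toy case the flat wall gets the peak right but misstates demand in most hours, which is exactly the information a stability study loses when it models a data center as constant load.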

Support ‘Energy for AI’ and ‘DER as infrastructure’ through clear, tech-agnostic, service-specific policies

Everything above rests on this final principle: if we want private capital to fund the grid of the future, we must be clear about what earns value. Flexibility must be treated as infrastructure. Energy for AI must be judged not by how much it uses, but by how well it performs. We need to set standards for what good participation looks like — and allow market, utility and community stakeholders to meet those standards in diverse, verifiable ways. That means policies that are tech-agnostic on whether flexibility comes from a 100-MW hyperscale campus or a thousand residential batteries. What matters is the reliability contribution. The system value. If a resource shows up when the grid is straining, it counts.