Remi Raphael is vice president of AI transformation and chief AI officer for the Electric Power Research Institute.

The last two years have seen seismic changes in the utility sector, rivaling those we witnessed during the era of energy deregulation. After decades of flat load growth on the U.S. grid, electricity demand has increased across the economy as AI data centers, industrial onshoring and the electrification of transportation have converged.

In the United States, data centers are expected to account for nearly half of the growth in electricity demand between now and 2030, according to the International Energy Agency. AI is transforming every industry, and the energy system of the future will need to keep evolving to meet this new demand for power while ensuring reliable and affordable electricity for all. This will take continued collaboration across the energy and technology industries.

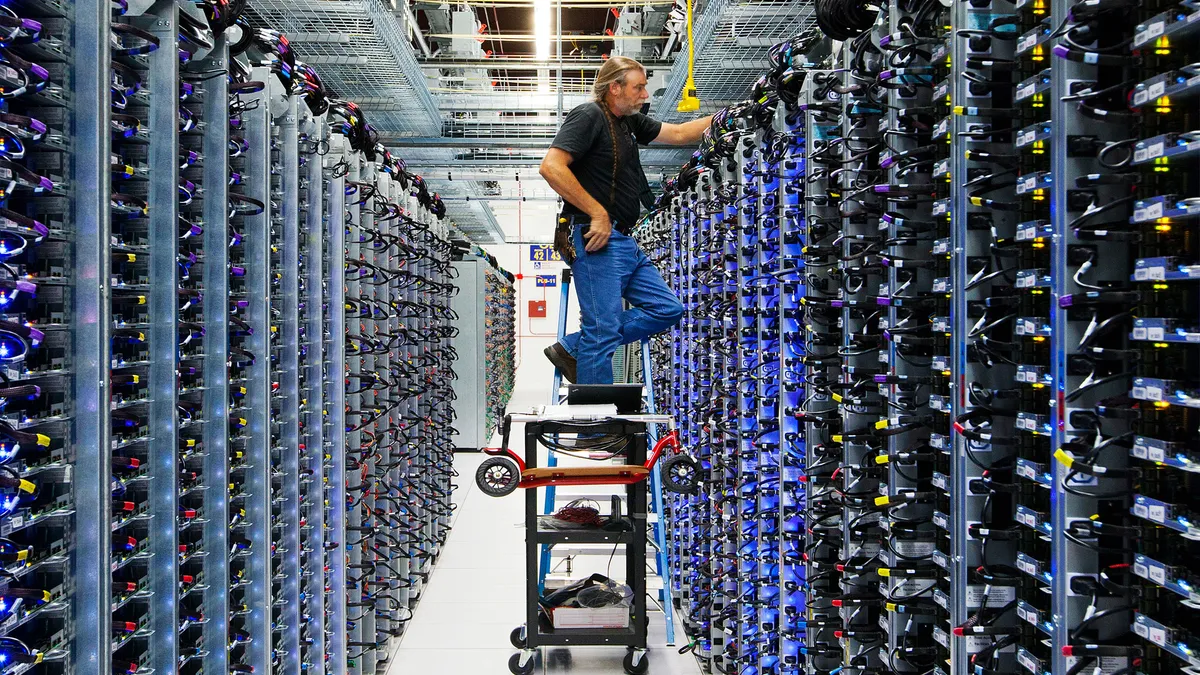

This energy evolution starts with new ways of thinking. Rapid growth in data center demand — where a single AI rack at peak load can use as much power as about 100 typical U.S. homes — has exposed mismatches between grid expansion and data center development timelines. Connecting these new data centers to the grid can sometimes take years due to interconnection queues, transmission buildout timelines and supply chain challenges.

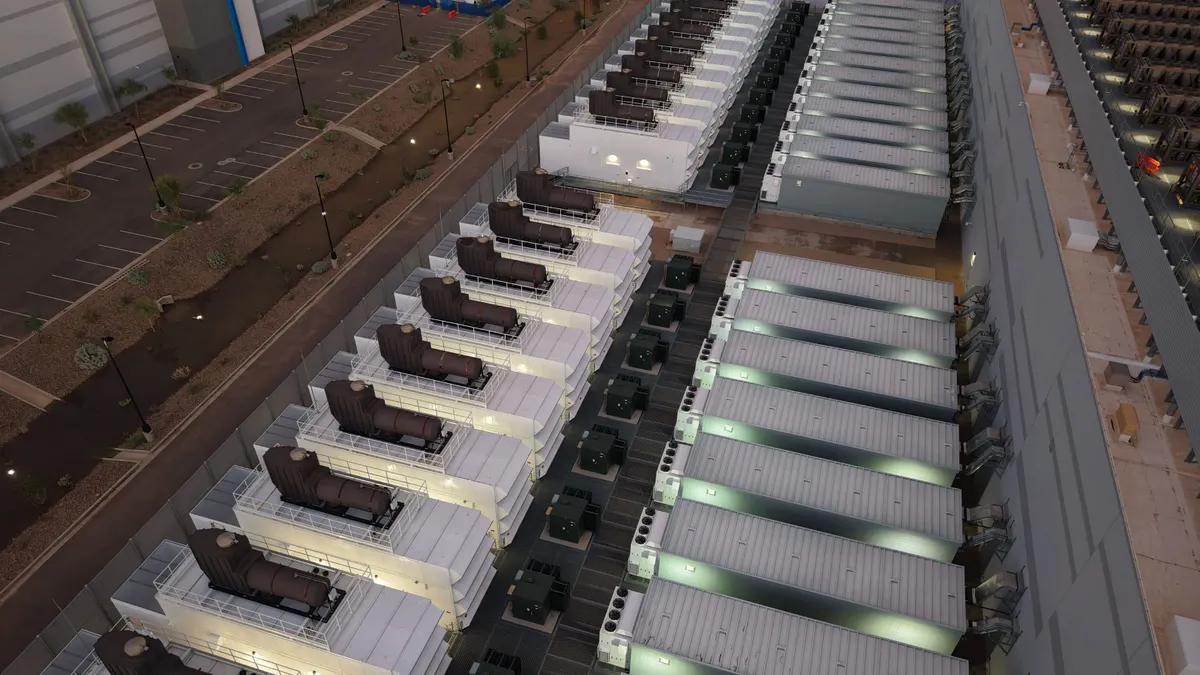

Congress is poised to take up permitting reform, which can help accelerate these timelines; however, the timing of passage remains unclear. In the interim, solutions are already being tested. EPRI is helping uncover real-world solutions through DCFlex, bringing together more than 60 utilities, researchers, hyperscalers and tech innovators to make data centers part of the solution for grid reliability. Think of the grid as a superhighway. It must always remain open and free from traffic congestion. Data centers can throttle back their power use when the grid is under stress without compromising performance. It's like taking 18-wheelers off the road during rush hour.

Recent studies suggest that load flexibility for AI data centers could unlock 100 GW of new data center capacity in the United States without requiring extensive new generation or transmission infrastructure, enough to meet projected AI growth for the next decade.

Several DCFlex demonstration projects are already underway in Phoenix, Charlotte, and Paris, France, with more anticipated in 2026. Early results for the Arizona project showed a 25% energy reduction over three hours, highlighting the potential for an AI data center to provide grid relief during a peak system event, such as a hot summer day with high power demand.
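To put a 25% reduction over three hours in energy terms, the arithmetic can be sketched as below. This is an illustration only: the 100 MW baseline load is a hypothetical figure, not a number from the Arizona project, and the sketch assumes the facility would otherwise have drawn a constant load across the window. Only the 25% and three-hour values come from the results described above.

```python
def curtailed_energy_mwh(baseline_mw: float, reduction_fraction: float, hours: float) -> float:
    """Energy not drawn from the grid during a curtailment window, in MWh.

    Assumes the baseline load would have been constant over the window.
    """
    if not 0.0 <= reduction_fraction <= 1.0:
        raise ValueError("reduction_fraction must be between 0 and 1")
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return baseline_mw * reduction_fraction * hours


# Hypothetical facility size, chosen only to make the arithmetic concrete.
BASELINE_MW = 100.0
# Values taken from the early results described in the text.
REDUCTION_FRACTION = 0.25
WINDOW_HOURS = 3.0

relief_mw = BASELINE_MW * REDUCTION_FRACTION
relief_mwh = curtailed_energy_mwh(BASELINE_MW, REDUCTION_FRACTION, WINDOW_HOURS)

print(f"Peak relief: {relief_mw:.0f} MW")
print(f"Energy avoided over the window: {relief_mwh:.0f} MWh")
```

Under these assumptions, the facility frees up 25 MW for the duration of the event, or 75 MWh in total. Scaling the baseline up or down scales both figures proportionally.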

AI growth isn’t limited to the U.S. The International Energy Agency forecasts global data center energy use in 2030 will more than double from 2024 levels to 945 TWh, slightly more than Japan’s total electricity consumption today.

As data center loads grow, leveraging the integrated grid will be key to balancing speed, cost, environmental performance and long-term operational excellence. The presence of data centers as intelligent, grid-aware energy users is integral to modernizing and improving the grid.

Utilities are also integrating AI into power system planning and operations. This frees humans to do what humans do best, thinking creatively and handling new or unforeseen situations, while computers do what computers do best: quickly processing large volumes of data and performing accurate computations.

However, AI should not be seen as a replacement for humans in the energy sector.

As part of an independent, first-of-its-kind assessment of AI’s potential in the power sector, EPRI has released domain-specific benchmarking results that show both the limitations and the opportunities of this technology. Benchmarking with electric power-specific questions helps assess how well large language models (LLMs) understand and respond to the technical, regulatory and operational questions that utility employees face daily. While AI has great potential in the sector, expert oversight remains imperative, especially with open-ended questions focused on realistic scenarios of power utility operations. Those open-ended questions could result in less than 50% accuracy in some cases.

Among the report takeaways:

- Multiple-choice questions (MCQs) provide a strong but incomplete baseline. On the MCQs, leading frontier models scored 83% to 86%, broadly consistent with their performance on external math and science benchmarks. However, these scores benefit from the structure of MCQs and don’t necessarily represent proficiency.

- Open-ended questions exposed a reliability gap. When the same questions were asked in open-ended form instead of as MCQs, accuracy dropped by 27 percentage points on average. On expert-level questions, top models scored only between 46% and 71%.

- Open-weight models are closing the gap. These are LLMs whose trained parameters, known as weights, are publicly available. While typically one generation behind proprietary frontier models, they are rapidly improving. Because they can be self-hosted and contained, they can offer utilities both deployment flexibility and privacy.

- Web search modestly improves accuracy. Allowing models to search the web boosted scores slightly (2% to 4%), while also introducing the risk of retrieving irrelevant or misleading information.
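The gap between multiple-choice and open-ended results is measured in percentage points, meaning the simple difference between two accuracy percentages. A minimal sketch of that calculation follows. It is not EPRI's benchmarking code: the `GradedAnswer` record, the function names and the toy data are all hypothetical, invented here only to show how the comparison works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GradedAnswer:
    """One graded model response (hypothetical record layout)."""
    question_id: str
    fmt: str  # "mcq" or "open"
    correct: bool


def accuracy_pct(answers: list[GradedAnswer], fmt: str) -> float:
    """Percentage of answers in the given format that were graded correct."""
    graded = [a for a in answers if a.fmt == fmt]
    if not graded:
        raise ValueError(f"no answers with format {fmt!r}")
    return 100.0 * sum(a.correct for a in graded) / len(graded)


def format_gap_points(answers: list[GradedAnswer]) -> float:
    """MCQ accuracy minus open-ended accuracy, in percentage points."""
    return accuracy_pct(answers, "mcq") - accuracy_pct(answers, "open")


# Toy data: the same four questions asked in both formats.
# These outcomes are made up and do not reflect any reported results.
toy_answers = [
    GradedAnswer("q1", "mcq", True),
    GradedAnswer("q2", "mcq", True),
    GradedAnswer("q3", "mcq", True),
    GradedAnswer("q4", "mcq", False),
    GradedAnswer("q1", "open", True),
    GradedAnswer("q2", "open", True),
    GradedAnswer("q3", "open", False),
    GradedAnswer("q4", "open", False),
]

print(f"MCQ accuracy: {accuracy_pct(toy_answers, 'mcq'):.0f}%")
print(f"Open-ended accuracy: {accuracy_pct(toy_answers, 'open'):.0f}%")
print(f"Gap: {format_gap_points(toy_answers):.0f} percentage points")
```

In this toy case, accuracy falls from 75% to 50%, a gap of 25 percentage points. Pairing the same questions across formats is what lets the drop be attributed to the answer format rather than to question difficulty.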

This analysis is just the start. In the coming months, EPRI plans to evaluate domain-specific models that are trained or fine-tuned on grid data and workflows. In the next few years, we anticipate that AI systems will continue to evolve and be adopted by the utility sector, helping to synthesize information quickly, improve planning forecasts, optimize tools and provide decision support for grid operators.

The path forward is clear: embracing innovation at every level is key to keeping pace with an AI-powered world. This isn’t just about meeting demand; it’s about reimagining the grid as a dynamic, intelligent system that can flex, adapt and thrive. By pairing human expertise with AI-driven insights and forging collaborations across industries, we can build an energy ecosystem that is reliable and affordable for all.

As part of our public benefit mission, EPRI is taking a leadership role in bridging the gap between AI research and development and power industry applications. The stakes are high, but so is the opportunity — and the time to act is now.